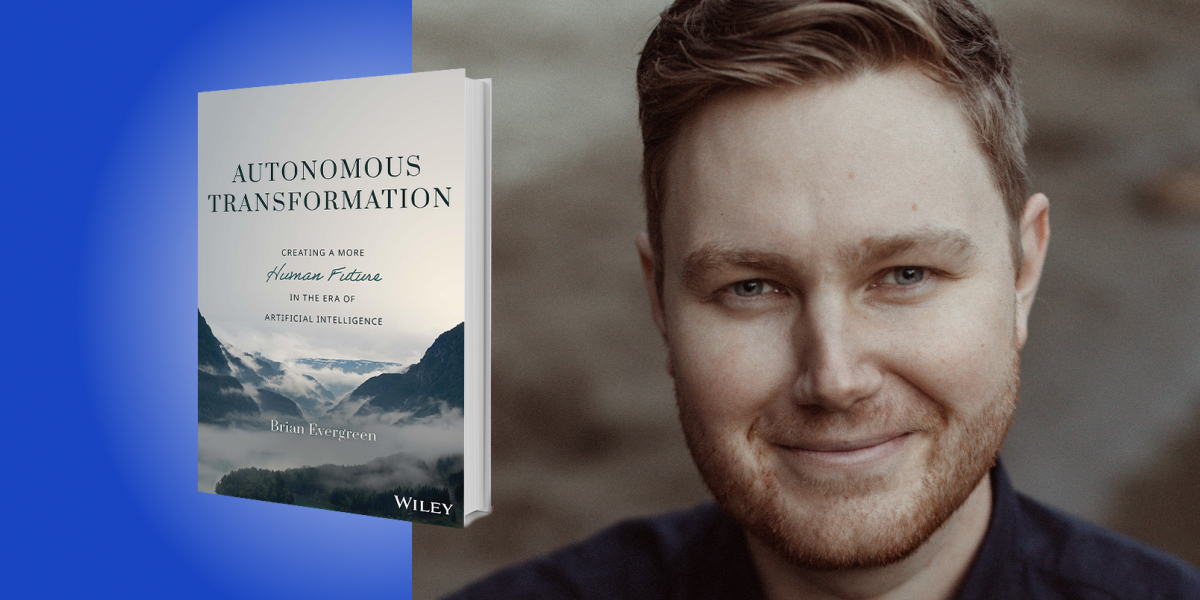

Brian Evergreen is the founder of The Profitable Good Company, a leadership advisory company that partners with and equips leaders to create a more human future in the era of artificial intelligence. Evergreen has held leadership positions in artificial intelligence strategy at Microsoft and Accenture, and is a Senior Fellow at The Conference Board. He is also a guest lecturer at the Kellogg School of Management and Purdue University.

Below, Brian shares five key insights from his new book, Autonomous Transformation: Creating a More Human Future in the Era of Artificial Intelligence. Listen to the audio version—read by Brian himself—in the Next Big Idea App.

1. The next era of transformation goes beyond digital transformation.

We have been working to transition from analog to digital paradigms, and the next step is to transition from digital to autonomous. Digital transformation has reached a point where it no longer has meaning—it has evolved to mean anything related to technology. Therefore, I researched and developed new phrases to add more clarity to this picture.

If you go back to the roots of the word, transformation means to fundamentally change the nature or composition of something. We’ve been calling all of these initiatives Digital Transformation, but what about the vast majority of them, where the nature or composition is not necessarily changed, just made more efficient?

The word that best applies to this scenario is reformation: vastly improving a product or process without changing its structure or composition. We now have five terms we can use to describe these initiatives with greater clarity. The first is Digital Reformation, which we have seen in the airline industry. We book and take flights the same way; it’s just more efficient now. The second is Digital Transformation, such as Netflix transforming how we experience entertainment. The third is Autonomous Reformation, which is moving from an analog or digital paradigm to an autonomous one without changing the process, product, or structure, such as robotics in warehousing, which is much more efficient and safer for humans but is still the same process. The fourth term is Autonomous Transformation, which several organizations are actively working toward, but we have yet to see a lighthouse example in which autonomous systems have transformed the human experience or created a new market category. The fifth and last term is Creation.

2. It is time to end the Industrial Revolution and disinherit ourselves from the mechanistic system of leadership.

Why are only 13 percent of artificial intelligence initiatives successful? One might assume that the reason would be technical, or have to do with industry applications or economic incentives. What I discovered is that creating a more human-centered organization is not just a nice-to-have for the executives who want to do good in their organizations and for their customers. It’s actually the differentiator between organizations that are seeing traction with advanced technologies and those that are not. The easiest way for an organization to become more human-centered is to make one core mindset shift.

I’ll share an example: What do you do when a machine goes down? You eliminate the deficiencies, and once they are gone, the machine comes back online. That doesn’t translate into a leadership context because a team or organization is not a mechanistic system; it’s a social system. While well-intended leaders and thought leaders are talking about the next cycle of the industrial revolution, I argue that we need to make an intentional mindset shift. We need to begin to recognize all the areas within our teams and organizations where we are leading a social system as if it’s a mechanistic system.

“In the future, the human must be first.”

An example would be Disneyland. Disneyland is an intricately balanced social system. The cast members, the name Disney gives to the employees who run the park, are an integral part of the experience. Imagine if every cast member stopped being friendly with guests and began acting impatient or uninterested. The mechanistic approach would be a mass layoff and replacement. The social systemic approach begins with acknowledging there are social dynamics at play, and examining whether there is something about Disney’s training, policies, management, etc. that could be causing broad-sweeping discontent. This effectively sets a strategy for the social culture of Disneyland with the same degree of rigor as a merger and acquisition or a technology strategy.

I learned this through practical experience, working with and seeing what was and wasn’t working for many of the world’s most valuable companies. They weren’t describing it this way at the time, but the organizations who were getting traction with AI were focusing on the interactions between their data scientists and their domain experts, for example—a social dynamic. The organizations who were having the most difficulty harnessing the potential of these technologies were usually made up of people who cared about other people, and they were generally quite aware of social dynamics. But the moment their strategy or management hat came on, social dynamics disappeared from their chessboard and they could only seriously consider technical or financial moves.

Frederick Taylor famously wrote in 1911, “In the past, the man has been first, in the future, the system must be first.” He was able to realize his vision and it’s still pervasive today. One hundred and twelve years later, we can now say that in the past, the system has been first. In the future, the human must be first.

3. Future Solving.

Imagine asking a group of executives and thought leaders today “What should the first step be in a given fiscal cycle or strategy setting?” The likely answer is that you need to begin with what problems you want to solve. But problem solving is the craft of getting rid of what you don’t want and has no bearing on what you do want.

Bottom-up planning is a commonly practiced planning cycle in which each team lists the problems they want to solve, which are then down-selected and grouped by their leadership, and so on until the list reaches the top of the organization. This is an example of the dynamic of Maintenance Mode: being stuck in a cycle of trimming, pruning, and making incremental improvements to existing value propositions. It is a downstream impact of a mechanistic mindset, and it will never lead to an innovative breakthrough.

Since problem solving is the craft of getting rid of what you don’t want, Future Solving is the craft of getting what you do want. It begins with envisioning the future of your team, your organization, or your market. What future do you want? Some organizations might want to guarantee there’s no child labor in their supply chains; others may want to improve workplace safety; still others may want their customers to be so delighted with their customer care experiences that they are more likely to stay with the brand even if there are product or process issues down the line.

“Future Solving is the craft of getting what you do want.”

Once the vision for the future is set, the next step is to ask, “What would have to be true for this to happen?” You then work backwards from the future, employing our most core human traits: empathy, creativity, logic, and reasoning. That creates a clear path from that future to the starting point of today, which significantly clarifies investments. Working backwards is akin to sitting and creating a strategy in a chess game before picking up a piece. Your queen may be in danger, and you could simply solve the problem by moving her to safety. But if you have a vision for what you’re working toward, you can move the queen to safety and advance your position toward your end goal.

4. Replace data-driven decision-making with reason-driven decision-making.

Making decisions based on data is a rational idea. Being data-driven is a significant improvement on gut-driven decision-making. But it has stifled innovation in organizations for decades. In its current form, data-driven decision-making is an inherently mechanistic and unscientific paradigm. If a leader asks one of their teams to provide a data-driven recommendation, what they are ultimately asking is that their team produce a set of numbers on which the leader will justify their decision. The logic and reasoning of the team is not accounted for in what is approved, and the team is ultimately serving in a computational capacity.

Gate-keeping decisions through a data-driven lens sounds scientific on the surface, but in practice, it has become unscientific. The scientific method begins with asking a question, followed by stating a hypothesis, conducting an experiment, analyzing the results, and making a conclusion. Throughout the process, data is collected to inform the analysis and conclusion.

In the prevailing process for organizational decision-making today, the process has become: asking a question, stating a hypothesis, gathering data from past experiments and experiments others have conducted through competitive analysis, assessing target addressable market, etc., analyzing the results, making a conclusion (i.e. the investment decision), and then conducting an experiment—but rather than calling it an experiment, it’s tied to specific metrics that impact the careers of the team assigned to execute the “ROI-proven-in-advance initiative.”

In a surprising twist, investments in artificial intelligence, coming in at a dismal 13 percent success rate, have shined a spotlight on an important truth for leaders today: this process is not only unscientific, but it isn’t working. It is time for a new approach—one that is more scientific and rehumanizes decision-making—fit for purpose in the 21st Century.

Reason-driven decision-making places human reasoning at the top of the hierarchy of decision-making, on the foundation of data-driven methodologies. It adjusts investment criteria to acknowledge the limits of the empirical data, and it accounts just as rigorously for the logic and reasoning of the humans who prepared the strategy and recommendation, as well as the logic and reasoning of those in support of the decision and those against it. Leaders then sign off not only on the numbers, but on the logic and rationale of the experts on their teams and in their peer groups.

5. The reformational economics of omission versus commission.

Most people are familiar with the fact that Blockbuster passed on opportunities to acquire Netflix in 2000. What most people do not know is that Blockbuster built a streaming service, 12 years before Netflix did, that never made it to market. Ron Norris was a consulting executive whose team had designed, built, and successfully piloted the first streaming service on behalf of Blockbuster (called Blockbuster on Demand). In 1995, he received a call from a senior executive with oversight of the program. He expected congratulations, gratitude, and questions about how quickly he and his team could bring this to market. Instead, he was directed to cancel the initiative altogether.

The executive informed him that the new offering would eliminate late fees, which accounted for 12 percent of Blockbuster’s revenue, and that it was therefore not a suitable path forward. When Blockbuster filed for bankruptcy in 2010, this executive may have remembered the conversation he had with Norris and imagined how differently things would have turned out had he not chosen to cancel the initiative. One thing is certain, however: that decision, despite disastrous consequences for the organization, had no negative impact, aside from opportunity cost, on the finances or reputation of the executive responsible.

“Errors of omission have a higher impact than errors of commission.”

This is because leaders and organizations are measured on their errors of commission, actions they took, but not their errors of omission, actions they did not take. It is even likely that this executive included a bullet point about protecting 12 percent of annual revenue in his annual review.

Errors of omission have a higher impact than errors of commission. They are a shadow force with the power to end an organization. As organizational leaders make decisions, they are not aware of what they are not seeing—the risks they are not taking and learning from. Their errors of commission and their successes become a self-affirming loop until an internal leader disrupts the pattern, or the accumulated errors of omission have grown large enough to pave the path for a new organization to rise and overtake the legacy organization in the market.

A social systemic alternative to this approach would be to account for every act of omission alongside every act of commission. Those on either side of the hierarchy of a given decision-maker can then present decisions to be made. Those decisions, including the other options that were presented, can be recorded.

On a regular cadence, the decision-maker and team can review the impact of the acts of commission together with the considered impacts of the acts of omission, which can be researched in advance of the meeting. This could create a forum in which the team can discuss and learn. Through this process, decision-making capabilities of the whole social system can improve.

Imagine if, in considering which leader should be promoted to the executive leadership team, the deciding committee could look to the organization’s accounting system for a report on the net impact that leader has had on the organization. This report would include not only the funded initiatives and whether or not they were successful, but it would also include the initiatives that were not funded and acquisitions or partnerships that did not move forward. This would create a more holistic picture of the individual’s ability to discern strategic and tactical investments on behalf of the organization.
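As a rough illustration of what such a report could look like, here is a minimal Python sketch. It is not a method from the book: the record schema, the `net_impact` function, the single-number `impact` field, and every figure in it are assumptions invented for this example. It simply logs each presented option as an act of commission or omission and totals both sides for one decision-maker.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    COMMISSION = "commission"  # an option that was chosen and acted on
    OMISSION = "omission"      # an option that was presented but not pursued


@dataclass(frozen=True)
class DecisionRecord:
    """One option presented to a decision-maker (hypothetical schema)."""
    decision_maker: str
    option: str
    kind: Kind
    # Assumption: impact is a single number in arbitrary units.
    # For a commission it is the realized result of acting.
    # For an omission it is the researched estimate of what acting
    # would have yielded (positive = value missed, negative = loss avoided).
    impact: float


def net_impact(records, decision_maker):
    """Return (realized, forgone, net) for one decision-maker."""
    mine = [r for r in records if r.decision_maker == decision_maker]
    realized = sum(r.impact for r in mine if r.kind is Kind.COMMISSION)
    forgone = sum(r.impact for r in mine if r.kind is Kind.OMISSION)
    return realized, forgone, realized - forgone


# Invented figures, for illustration only.
records = [
    DecisionRecord("leader_a", "expand existing product line", Kind.COMMISSION, 5.0),
    DecisionRecord("leader_a", "launch new service pilot", Kind.OMISSION, 40.0),
    DecisionRecord("leader_b", "fund internal pilot", Kind.COMMISSION, -2.0),
    DecisionRecord("leader_b", "pursue acquisition", Kind.OMISSION, -10.0),
]

for name in ("leader_a", "leader_b"):
    realized, forgone, net = net_impact(records, name)
    print(f"{name}: realized={realized}, forgone={forgone}, net={net}")
```

With these made-up numbers, leader_a looks stronger when only acts of commission are counted, but the larger forgone opportunity turns the net figure negative, while leader_b's declined option was a loss avoided. In practice the estimates for acts of omission would be far less certain than realized results, so a real report would likely need to carry that uncertainty rather than a single number.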
