Anil Ananthaswamy was a software engineer who transitioned careers to become an author and science journalist. He was formerly a staff writer and deputy news editor for New Scientist and writes regularly as a freelancer for New Scientist, Quanta, Scientific American, PNAS Front Matter, and Nature. His other books include The Edge of Physics, The Man Who Wasn’t There, and Through Two Doors at Once.

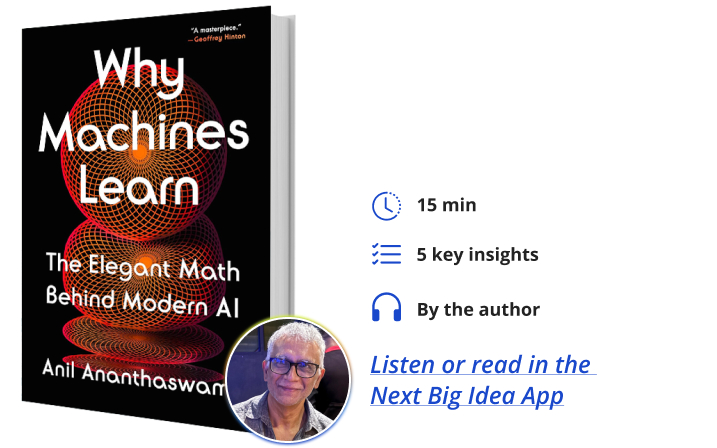

Below, Anil shares five key insights from his new book, Why Machines Learn: The Elegant Math Behind Modern AI. Listen to the audio version—read by Anil himself—in the Next Big Idea App.

1. Machines can learn.

I completed degrees in electronics and computer engineering in the days before machine learning and artificial intelligence. Back then, software engineers like me had to figure out the algorithms needed to solve problems. With the advent of machine learning, that has started to change: increasingly, machines learn how to solve problems from data.

Sometime around 2015, I found myself writing more articles about how machine learning is used in various science fields, such as astronomy and medicine. The dormant software engineer within me awoke. I wanted to learn how to build these systems, not just write about them.

My journey to understand AI began in earnest in 2019, when I became a Knight Science Journalism fellow at MIT. For my project, I decided to teach myself some machine learning and AI. It had been almost 20 years since I had written any code, and things had changed. So I went back to school, sitting alongside teenagers at MIT in Python programming classes.

As part of that project, I coded a simple deep neural network. Artificial neural networks are networks of artificial neurons. An artificial neuron is a simplified model of a biological neuron: it takes inputs, performs a computation on them, and produces an output. Connect a whole bunch of such neurons together and you get a neural network.
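
To make this concrete, here is a minimal Python sketch of a single artificial neuron. It assumes one common textbook formulation, not necessarily the one used in the book: the neuron multiplies each input by a weight, adds a bias, and passes the sum through a sigmoid function that squashes it into the range 0 to 1. The function names `neuron` and `sigmoid` are illustrative.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the open range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """A simplified artificial neuron: a weighted sum of the inputs plus a bias,
    passed through a sigmoid activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Two inputs; the larger first weight means the neuron "listens" more to input 0.
print(neuron([1.0, 0.5], [2.0, -1.0], 0.0))
```

A larger weight makes the neuron pay more attention to that input, and these weights are the knobs that training adjusts.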

Let’s say you have a problem to solve, meaning you want to turn a specific input into a particular output. For example, your input is an image of a cat, and you want the word “cat” to be the output. If you have many images of cats that human annotators have labeled as such, you can use them to train a neural network, teaching it to take an image as input and produce the label as output. But what does training mean? Training involves adjusting the strength of the connections between the artificial neurons: in essence, telling one neuron whether it should listen to another neuron or, more precisely, how much importance, or weight, it should give to that neuron’s output.

I’m glossing over the details, but essentially, training a neural network involves giving it enough labeled, or annotated, data to adjust the strengths of the connections between its neurons until it almost always produces the correct output. Once trained, the network can be shown an unlabeled image (one for which we don’t know whether it shows a cat), and it will likely produce the correct answer.
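
The training process described above can be sketched for the simplest possible case: a single sigmoid neuron trained by gradient descent on a tiny labeled dataset. This is a hypothetical toy, assuming the logical AND function stands in for labeled cat images and cross-entropy serves as the measure of error; real image classifiers use many layers and far more data. The learning rate and epoch count are arbitrary choices.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, weights, bias):
    """Output of a single sigmoid neuron, read as the probability that the label is 1."""
    return sigmoid(sum(xi * wi for xi, wi in zip(x, weights)) + bias)

def train(data, labels, lr=0.5, epochs=5000):
    """Adjust connection strengths by gradient descent on the cross-entropy loss."""
    weights = [0.0] * len(data[0])
    bias = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(data, labels):
            # For a sigmoid neuron with cross-entropy loss, the gradient with
            # respect to the weighted sum is simply (prediction - label).
            error = predict(x, weights, bias) - y
            for i, xi in enumerate(x):
                grad_w[i] += error * xi / n
            grad_b += error / n
        # Nudge each weight against its gradient to reduce the error.
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias

# Hypothetical toy data: the AND function, labeled 1 only when both inputs are 1.
data = [[0, 0], [0, 1], [1, 0], [1, 1]]
labels = [0, 0, 0, 1]
weights, bias = train(data, labels)
print([round(predict(x, weights, bias)) for x in data])
```

Each pass over the labeled examples nudges every weight in the direction that reduces the error; after training, the neuron's rounded outputs should reproduce the labels.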

When I built my first neural network and saw it learning—detecting patterns in the data it was fed and embedding knowledge of those patterns into the network—I was blown away. None of this would be surprising to machine learning practitioners or scientists, but to a newbie, it was revelatory. Machines can learn.

2. The math behind machine learning and AI is beautiful.

Cool, elegant theorems and proofs are the reason why machines can learn. Math makes machine learning and AI possible.

In the late 1950s, a Cornell University psychologist named Frank Rosenblatt designed something he called a perceptron. It was the world’s first artificial neuron that could learn. Rosenblatt developed an algorithm that taught the neuron to distinguish between images of, say, two digits or two letters. In essence, the neuron learned to draw a straight line between two clusters of data, where one cluster represented a specific digit (let’s say zero) and the other represented a different digit (let’s say nine). When the trained neuron is given a new, previously unseen image, it simply checks which side of the line the image falls on and classifies it as a zero or a nine accordingly.
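
Here is a minimal sketch of the perceptron learning rule in its usual textbook form, assuming two-dimensional points stand in for images (the coordinates and labels below are made up for illustration, with +1 for “zero” and -1 for “nine”). The line is w1·x1 + w2·x2 + b = 0; whenever a point lands on the wrong side of it, the rule shifts the line toward that point by adding the point, scaled by its label, to the weights.

```python
def perceptron_train(points, labels, max_epochs=100):
    """Textbook perceptron learning rule.

    points: list of (x1, x2) pairs; labels: +1 or -1.
    Returns (weights, bias, converged).
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for (x1, x2), y in zip(points, labels):
            # Misclassified: the point is on the wrong side of the line (or on it).
            if y * (w[0] * x1 + w[1] * x2 + b) <= 0:
                w[0] += y * x1
                w[1] += y * x2
                b += y
                mistakes += 1
        if mistakes == 0:  # a full pass with no errors: a separating line was found
            return w, b, True
    return w, b, False

def classify(point, w, b):
    """Report which side of the learned line a new point falls on."""
    x1, x2 = point
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else -1

# Hypothetical clusters: +1 plays the role of "zero", -1 the role of "nine".
points = [(2, 1), (1, 3), (3, 2), (-1, -1), (-2, -1), (-1, -3)]
labels = [1, 1, 1, -1, -1, -1]
w, b, converged = perceptron_train(points, labels)
print(converged, classify((2.5, 2.5), w, b))
```

On this data the rule settles on a separating line after a single update, and a new point is classified simply by the sign of w1·x1 + w2·x2 + b.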

The real power of this simple algorithm came from the mathematical proof that followed: others were able to show that if a straight line exists that can separate two clusters of data, then Rosenblatt’s perceptron will find one such line after a finite number of steps. In other words, whenever the two classes of data can be separated by a straight line, the perceptron is guaranteed to learn to distinguish between them. It will converge to a solution, hence the name of the result: the perceptron convergence proof. Such certainties are like gold in computer science.

The proof is simple and elegant. It uses basic linear algebra to manipulate vectors, which are just sequences of numbers. When I learned of this proof, I realized I wanted to write Why Machines Learn. There was so much beauty in the math, and I felt compelled to communicate it to readers. In the book, such proofs appear as mathematical codas at the end of each chapter.
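
For readers who want to see that linear algebra, here is a sketch of the standard textbook version of the argument. It assumes labels $y_i \in \{+1, -1\}$, a bias folded into the weight vector by appending a constant 1 to every input, a start from $\mathbf{w}_0 = \mathbf{0}$, inputs with $\|\mathbf{x}_i\| \le R$, and a unit vector $\mathbf{w}^*$ that separates the data with margin $\gamma > 0$, so that $y_i\,\mathbf{w}^*\cdot\mathbf{x}_i \ge \gamma$ for every $i$. Each mistake triggers the update $\mathbf{w}_{k+1} = \mathbf{w}_k + y_i\mathbf{x}_i$.

$$
\begin{aligned}
\mathbf{w}_{k+1}\cdot\mathbf{w}^* &= \mathbf{w}_k\cdot\mathbf{w}^* + y_i\,\mathbf{x}_i\cdot\mathbf{w}^* \ge \mathbf{w}_k\cdot\mathbf{w}^* + \gamma
&&\Longrightarrow\quad \mathbf{w}_k\cdot\mathbf{w}^* \ge k\gamma \\
\|\mathbf{w}_{k+1}\|^2 &= \|\mathbf{w}_k\|^2 + 2\,y_i\,\mathbf{w}_k\cdot\mathbf{x}_i + \|\mathbf{x}_i\|^2 \le \|\mathbf{w}_k\|^2 + R^2
&&\Longrightarrow\quad \|\mathbf{w}_k\|^2 \le kR^2 \\
k\gamma &\le \mathbf{w}_k\cdot\mathbf{w}^* \le \|\mathbf{w}_k\|\,\|\mathbf{w}^*\| = \|\mathbf{w}_k\| \le \sqrt{k}\,R
&&\Longrightarrow\quad k \le \frac{R^2}{\gamma^2}
\end{aligned}
$$

The second line uses the fact that an update happens only on a mistake, where $y_i\,\mathbf{w}_k\cdot\mathbf{x}_i \le 0$, together with $y_i^2 = 1$; the third line uses the Cauchy–Schwarz inequality. Because the number of updates $k$ can never exceed $R^2/\gamma^2$, the perceptron must eventually stop making mistakes, which is exactly the convergence guarantee.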

3. The math behind machine learning is relatively simple.

Machine learning involves the kind of math you learn as early as high school. If you have a basic understanding of algebra, vectors and matrices, some calculus, and some probability and statistics, then getting under the mathematical skin of machine learning is an enjoyable breeze. Even without this background, it is possible to understand the ideas behind Why Machines Learn conceptually. And if you studied calculus, algebra, and trigonometry at school, that’s more than enough.

I think of my book as something along the lines of The Theoretical Minimum series by physicist Leonard Susskind. His books explain the theoretical minimum math needed to understand classical physics or quantum physics; my book aims to communicate the theoretical minimum math needed to understand machine learning and modern artificial intelligence. To this end, rather than eschewing equations, as is the norm in popular science books, I embrace them, hoping that readers will find them beguiling, too.

4. Machine learning is much more than ChatGPT.

I started writing Why Machines Learn well before ChatGPT entered the lexicon of popular culture. Today, the term “modern AI” is often used as a synonym for deep neural networks, and ChatGPT is one kind of deep neural network. But machine learning is a vast field, populated by algorithms that ruled the roost before deep neural networks became the force they are today, a rise made possible by enormous amounts of training data coupled with enormous amounts of computing power. Therein lies the story of machine learning.

Why Machines Learn begins with Frank Rosenblatt’s perceptron, the first so-called single-layer neural network. Scientists in the 1960s did not know how to train neural networks that had more than one layer. Worse, an elegant mathematical proof showed that single-layer neural networks could not solve certain kinds of problems. For example, say you must separate two clusters of data but cannot draw a straight line between them; you can only separate them with a curve, or nonlinear boundary. Perceptrons could not find such a curve. As a result of this proof, interest in neural network research came to a standstill. Only a few people kept the faith, believing that neural networks would eventually be able to solve such problems.
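
A standard illustration of such a problem, not named in the text above but widely used, is the XOR pattern: label the corners (0, 1) and (1, 0) of a square one way and (0, 0) and (1, 1) the other. No straight line separates them. The minimal Python sketch below runs the usual textbook perceptron rule on XOR and, for contrast, on AND, which a straight line can separate; the cap of 1,000 passes over the data is an arbitrary choice.

```python
def perceptron_train(points, labels, max_epochs=1000):
    """Textbook perceptron learning rule; returns (weights, bias, converged)."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for (x1, x2), y in zip(points, labels):
            if y * (w[0] * x1 + w[1] * x2 + b) <= 0:  # wrong side of the line
                w[0] += y * x1
                w[1] += y * x2
                b += y
                mistakes += 1
        if mistakes == 0:
            return w, b, True
    return w, b, False

corners = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_labels = [-1, -1, -1, 1]  # separable by a straight line
xor_labels = [-1, 1, 1, -1]   # needs a nonlinear boundary

print(perceptron_train(corners, and_labels)[2])  # prints True
print(perceptron_train(corners, xor_labels)[2])  # prints False
```

Because no line separates the XOR points, every pass over the data misclassifies at least one of them, so the rule never settles, no matter how large the cap.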

Meanwhile, others were developing machine learning algorithms that were not based on neural networks. Why Machines Learn delves into the historical and mathematical arc of these advances.

By the 1980s, researchers had figured out how to train neural networks with multiple layers of artificial neurons, using an algorithm called backpropagation. Networks with many such layers came to be called deep neural networks. By the 2010s, deep neural networks had come to dominate machine learning. Now, they are all the rage.
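
The technique that made multi-layer training practical is backpropagation: the error at the output is passed backward through the network, layer by layer, to work out how much each connection contributed to it. Below is a minimal pure-Python sketch, assuming a single hidden layer of four sigmoid neurons, a squared-error loss, and arbitrary choices of learning rate, epoch count, and random seed. On XOR, the classic pattern a single perceptron cannot separate, a network like this typically learns a nonlinear boundary, though small networks can occasionally stall in a poor solution depending on their random starting weights.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, b1, W2, b2):
    """Two-layer network: a hidden layer of sigmoid neurons feeding one sigmoid output."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    out = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, out

def gradients(x, y, W1, b1, W2, b2):
    """Backpropagation for the loss 0.5 * (out - y)**2 on a single example."""
    h, out = forward(x, W1, b1, W2, b2)
    d_out = (out - y) * out * (1 - out)  # error signal at the output neuron
    # Pass the error back through each output weight to the hidden neurons.
    d_h = [d_out * W2[j] * h[j] * (1 - h[j]) for j in range(len(h))]
    gW1 = [[d_h[j] * xi for xi in x] for j in range(len(h))]
    gW2 = [d_out * hj for hj in h]
    return gW1, d_h, gW2, d_out

def loss(data, labels, params):
    """Mean squared-error loss over the dataset."""
    return sum(0.5 * (forward(x, *params)[1] - y) ** 2 for x, y in zip(data, labels)) / len(data)

def train(data, labels, hidden=4, lr=0.5, epochs=10000, seed=0):
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in data[0]] for _ in range(hidden)]
    b1 = [rng.uniform(-1, 1) for _ in range(hidden)]
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = rng.uniform(-1, 1)
    for _ in range(epochs):
        for x, y in zip(data, labels):  # one update per example
            gW1, gb1, gW2, gb2 = gradients(x, y, W1, b1, W2, b2)
            for j in range(hidden):
                W2[j] -= lr * gW2[j]
                b1[j] -= lr * gb1[j]
                for i in range(len(x)):
                    W1[j][i] -= lr * gW1[j][i]
            b2 -= lr * gb2
    return W1, b1, W2, b2

xor_data = [(0, 0), (0, 1), (1, 0), (1, 1)]
xor_labels = [0, 1, 1, 0]
params = train(xor_data, xor_labels)
print(loss(xor_data, xor_labels, params))
print([round(forward(x, *params)[1], 2) for x in xor_data])
```

The `gradients` function is the backpropagation step proper; `train` simply applies it one example at a time.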

5. Deployment of machine learning algorithms needs diverse involvement.

Leaving the deployment of machine learning algorithms solely in the hands of its practitioners, such as those working at big companies, is a bad idea. We need another layer of society—comprising policymakers, teachers, science communicators, and users of the technology—to come to grips with the math of machine learning. If we are to effectively use and regulate this powerful, disruptive, and potentially dangerous technology, we need to understand the source of its power and its limitations. The key to such insights lies in math.
