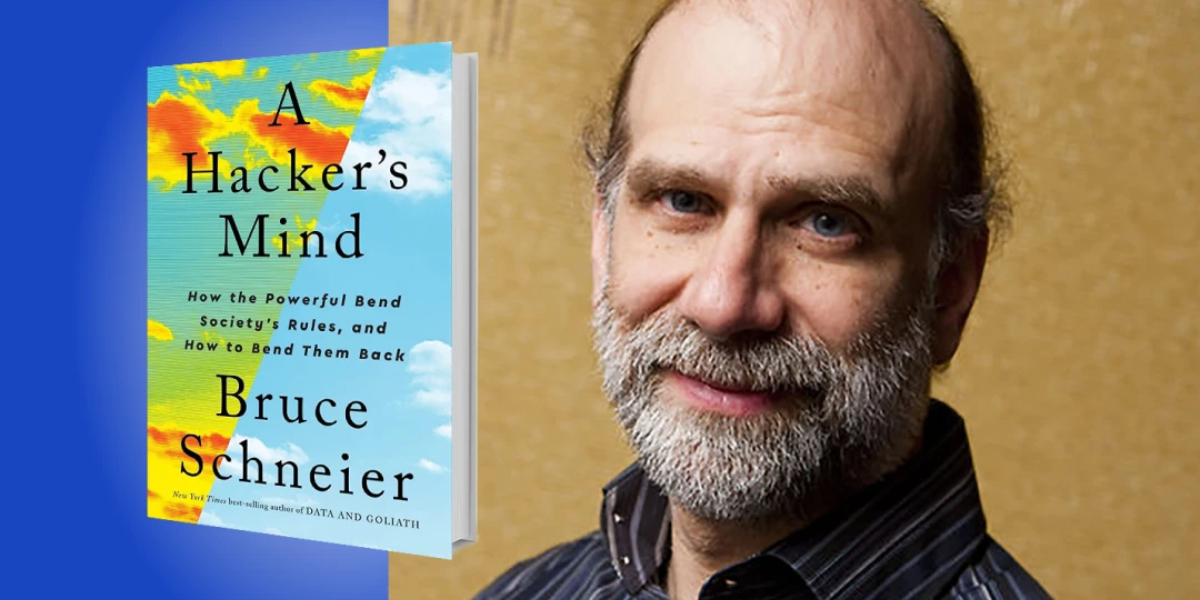

Bruce Schneier is a Lecturer in Public Policy at the Harvard Kennedy School. He is a cryptographer, computer security professional, and privacy specialist. He has been called a “security guru” by The Economist.

Below, Bruce shares 5 key insights from his new book, A Hacker’s Mind: How the Powerful Bend Society’s Rules, and How to Bend Them Back. Listen to the audio version, read by Bruce himself, in the Next Big Idea App.

1. Hacking is ubiquitous.

We normally think about hacking as something done to computers, but any system of rules can be hacked. Take the tax code as an example. It’s not computer code, but it is a code: a series of rules, or algorithms. It has vulnerabilities; we call them loopholes. It has exploits; we call them tax avoidance strategies. And there is an industry of black hat hackers finding exploitable vulnerabilities, whom we call tax attorneys and tax accountants.

So, what is a hack? A hack is something that the system permits but that is unanticipated and unwanted by its designers. This is a subjective term that encompasses a notion of novelty and cleverness. It’s an exploitation or a subversion. Hacks follow the rules of a system but subvert its goals or intent. My definition is deliberately general. It covers hacks against computer systems and against social, political, and economic systems: the tax code, the market economy, our systems of passing laws, and our systems of electing leaders.

All systems can be hacked. Even the best thought-out sets of rules will be incomplete and inconsistent. They’ll have ambiguities. As long as there are people who want to subvert the goals of a system, there will be hacks.

For instance, in 1975, a team showed up on the Formula 1 racetrack with a six-wheeled car. Everyone thought it was against the rules, but it turned out that the rule book was silent about the number of wheels a car could have. Or consider the hacking of frequent flyer programs—remember mileage runs? Our systems of banking and finance have been hacked: NOW accounts in the 1970s, or hacks against the 2010 Dodd-Frank regulations. Financial exchanges are regularly hacked: insider trading, front running, high frequency trading.

2. Hacks often become normalized.

When we think of hacks, we think of them as being quickly blocked by the system designers. This is true for computer systems, but with other systems, there’s often a longer and less formal process. The hack is discovered. It’s used. It becomes more popular. The governing system learns about it and then does one of two things: it either changes the system to prevent the hack, or it incorporates the hack into the system so that the hack becomes the new normal.

“Many of the things we think are normal started out as hacks.”

The governing body of Formula 1 racing updated its rule book to require exactly four wheels on a car. But many of the things we think are normal started out as hacks. Consider hacks on banking and finance. Hedge funds are filled with hacks, and most of them became the new normal. Or think of hacks on our political systems. The filibuster is a hack that was invented by a Roman senator in 60 BCE and has been normalized in the United States Senate.

3. Hacks are most effectively perpetrated by the rich and powerful.

We normally think of hacking as something the disempowered do against powerful systems, but the National Security Agency is probably the world’s most effective hacker. It has the budget, the expertise, and, for it, hacking is legal.

It’s far more common for the wealthy to hack systems to their own advantage. The wealthy are better able to discover hacks because they can devote more resources to the task. They can buy expertise. They’re better able to exploit the hacks they find, and their hacks are more likely to become normalized because the wealthy can also influence decision makers. Think about gig economy companies like Uber and Airbnb hacking local regulations.

4. Hacking is how systems develop, adapt, and evolve.

Hacking is about finding novel failure modes. When hacks actually work, they have unexpected outcomes, and those outcomes can be positive. Hacks are declared either legal or illegal by more general systems, like governing bodies for professional sports, the tax authorities, or governments. Systems can evolve in this manner.

There are many ways observant people have hacked our 2,000-year-old body of laws to function in the modern age. That’s an example of system evolution. This process is easier if there’s a single governing body in charge, like the Formula 1 racing administrators, or an airline facing frequent flyer hacks, or Microsoft battling software hacks. If there’s no governing body, we need alternative ways for society to adjudicate hacks. One such alternative is our court system.

“An evolution through hacking can be faster and more efficient than through the conventional mechanisms of updating sets of rules.”

Courts adjudicate hacks. Judges decide which hacks should be normalized and which should be prohibited. You come up with a novel way of interpreting a law that no one’s ever seen before. Someone says you can’t do that. You both go in front of a judge, and the judge decides. This is how a lot of our systems incorporate beneficial hacks. This is how our legal system adapts to changing circumstances.

An evolution through hacking can be faster and more efficient than through the conventional mechanisms of updating sets of rules. It incorporates the adversary into the system and, harnessed well, hacking accelerates system evolution.

5. Computers are accelerating societal hacking.

People have been hacking systems for thousands of years, but recently things have changed. Socio-technical systems increasingly mediate human interactions, which means computers are part of many of those interactions, and those computers can be hacked directly. So hacking computer systems has merged with hacking societal systems, and this affects those systems in several ways. Computers make technical and societal changes happen faster, so hacking is now faster. The scale is also larger: the magnitude of these effects is greater, so the impact and the potential for harm are greater. And the scope is wider because these systems are broader, so the effects are broader.

To a lesser extent, the appetite for fixing any of this is at a low. There’s a belief that government adjudication of hacks is somehow bad, and social opprobrium, which once acted as a limiting factor, is now basically gone.

AI and robotics will make all of this worse, specifically AI as a hacker. AIs will hack our systems in the way that humans do. Already, machine learning is finding vulnerabilities in computer code. It’s not very good at it, but it will get better. Imagine feeding an AI a country’s tax code and telling it to find useful and profitable hacks. Will it find ten, a hundred, or maybe a thousand hacks? How will that work?

“We need to figure out how to harness hacking for social progress without destroying society in the process.”

Consider Volkswagen, which was caught cheating on emission control tests. This was not a computer-identified hack, but a human-identified one. Volkswagen’s engineers programmed their engines to recognize an emission control test and behave differently while being tested. This hack remained undiscovered for ten or so years because it is hard to figure out what computers are doing. Imagine giving an AI system the goal of maximizing engine performance while still passing emission control tests. It would come up with this hack independently, and we might not know about it, because we don’t know what its code is doing. These are the sorts of hacks that will happen because the goals humans give AI are always underspecified, and AI systems will naturally hack in the way that humans do. The difference is that humans know when they’re hacking.

We need to figure out how to harness hacking for social progress without destroying society in the process. Hacks are increasingly common. They’re now the fastest way to modify systems and gain advantage. We need to make our social systems more resilient and more agile in responding to changing circumstances, and hacking, properly constrained, is a good thing.

How do we build governing systems that can quickly adjudicate hacks? Can we develop a framework that can decide when it is useful to prevent or encourage hacking? Can we provide a more democratic hacking environment where everyone has an equal opportunity to hack systems and for those hacks to be normalized, instead of one biased towards money and power?
