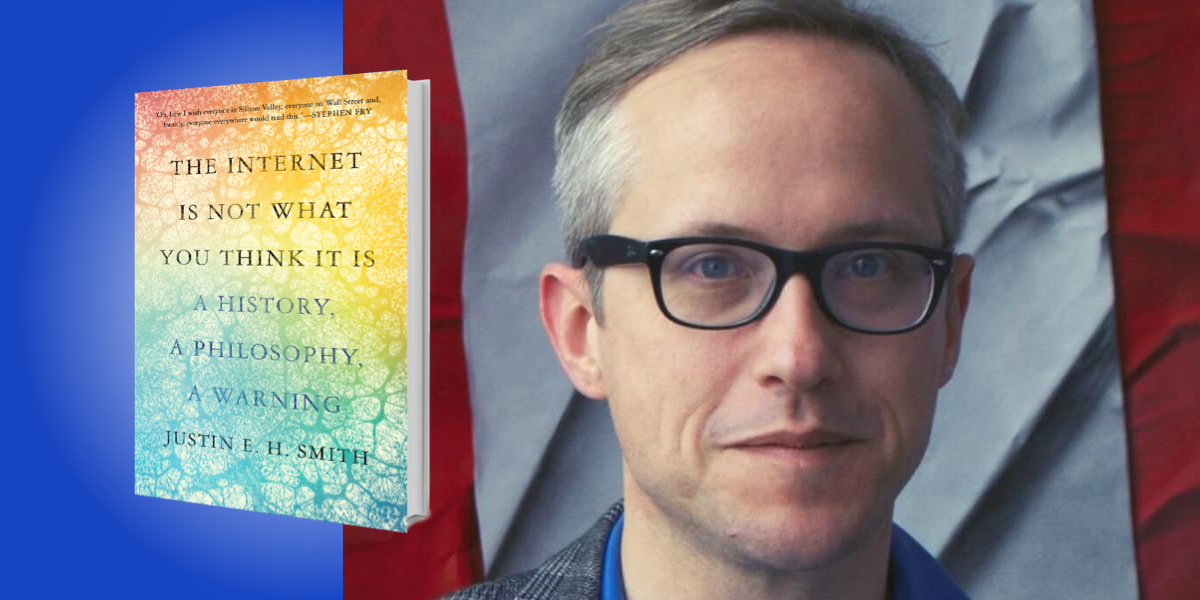

Justin E. H. Smith is professor of history and philosophy of science at the University of Paris. His books include Irrationality: A History of the Dark Side of Reason; The Philosopher: A History in Six Types; and Divine Machines: Leibniz and the Sciences of Life.

Below, Justin shares 5 key insights from his new book, The Internet Is Not What You Think It Is: A History, a Philosophy, a Warning. Listen to the audio version—read by Justin himself—in the Next Big Idea App.

1. The internet is not what you think it is.

Of course, the internet is many things—we pay bills on it, we can look at distant galaxies through NASA telescopes—but that’s not the part I’m talking about. I’m talking about the part that we use in our daily lives, the part that has dopamine reward systems built into it that lead to addiction. I’m talking about social media networks, which are not truly the place we go to pursue greater understanding of issues, and to share arguments with our adversaries in good faith. The internet offers only a simulation of a deliberative neutral space for the pursuit of something like what the German philosopher Jürgen Habermas would call “deliberative democracy.”

In fact, you might say that Twitter is, to rational deliberation, very much like what Grand Theft Auto is to chasing stolen cars. It bears a thematic connection to the thing in question, but in both cases, it’s a video game simulation. Twitter is a deliberation-themed video game, and the same goes for other social media platforms. But as long as privately owned social media is the only game in town, we have no other venue to go to. We can publish pamphlets in our basement and distribute them on a street corner, but nobody is going to know as long as the one path to effective, meaningful exchange is the algorithmically driven, privately held, for-profit venue of social media. Effectively, democracy is under serious threat. And for this reason, one ought to be very wary.

2. Why do we take the internet to be a glue of civil society, even though it obviously is not?

The arc of the dream of a society in which peace and rational deliberation prevail is very long, much longer than we tend to think of it. I would say that it begins in the 1670s and it dies in the 2010s. It’s around 1678 that Gottfried Wilhelm Leibniz develops a functional model of what he calls a “reckoning engine,” a mechanical device with gears and wheels and knobs that is suitable for any task of arithmetical calculation. You might think that’s a long way from being a computer, let alone a networked computer, but Leibniz recognizes that anything that can do arithmetic can also do binary—that is to say, it can crunch zeros and ones just as it can crunch all the natural numbers. So Leibniz understands that at least in principle, his reckoning engine does everything a computer can do, even though the proof of concept only comes along in the 1830s with the analytical engine of Charles Babbage and Ada Lovelace. Moreover, Leibniz also recognizes that there’s no reason why two such machines that are at a great distance from one another could not be connected by some mechanism that would facilitate telecommunication. So even though the various genetic strands of the internet would take a few more centuries to come together, the idea was there.

Now, Leibniz was also a diplomat, and his true lifelong project was reconciling the two primary groups of Christians in Europe after the Protestant Reformation and the Wars of Religion. He had huge conciliatory goals in the political and ecclesiastic spheres, and he sincerely believed that someday soon, once the machines were configured correctly, we would be able to punch in the arguments of two parties in conflict (say, two empires or the two churches) and the machines would simply tell us who was right. In this way, there would never be any enduring conflicts, and perpetual peace would reign. It was the ultimate expression of optimism in what the mechanization of reason might deliver to us.

And that dream is long. I remember the tail end of the dream from the 1990s and the aughts, when people were still talking about the figure of the “netizen,” someone who is able to engage in democratic participation much more effectively because of the mediation of the internet. And in 2011, in the very first hopeful moments of the Arab Spring, people were talking about Twitter as the great motor of democratic transformation in the Middle East.

But I see 2011 as the first clear moment of the disintegration of this centuries-old dream. As the various Arab Spring revolutions descended into bloodbaths, other important things were happening. It’s around that time that I first noticed that my newsfeed on Facebook was no longer showing me whatever news the people in my network happened to put up. Rather, certain posts were being given preference based on algorithms, which I was not allowed to know because they were corporate secrets.

Soon enough, this already bad situation escalated into a steady flow of misinformation through algorithmic preference. The feed became cluttered with information from operations that were dedicated, above all, to virality—to, as we say today, “gaming the algos” rather than simply communicating in a neutral public space. By 2016, we have Brexit, we have an internet troll elected president of the most powerful country in the world, and we have many other moments that made it impossible to hold on to Leibnizian optimism.

3. The idea of the internet is much older than you think.

The internet comes into being as an idea in the 1670s. But in many ways, the full history of the internet is continuous with the hundreds of millions of years of evolution of natural networks, such as the mycorrhizal networks connecting the roots of trees. Telecommunication networks in nature don’t only exist in tree roots; sperm whale clicks can be heard literally around the world, from the Atlantic Ocean to the Pacific. The pheromone signaling between species of moths is also telecommunicative. Telecommunication is something that has always existed as an aspiration and also, to some extent, as a reality for humans, through long-distance trade networks and so on.

The fact that these networks are later connected by wires, and then wirelessly, doesn’t make a significant or essential difference for thinking about the phenomenology or the cognitive and social dimension of long-distance information exchange. This connectedness is not exceptional in our species—it’s something that we see throughout nature. And whether we think of that connectedness as an analogy or as the literal truth is ultimately up to us. Personally, I think we can see it either way.

4. The Industrial and Information Revolutions (arguably) happened at the same time.

There’s an important development in 1804: the Jacquard loom. It’s for weaving patterns such as flowers into silk, and it uses punch cards. Though it lacked memory, this was in many ways the first true computer, the first machine that provides the model for what Ada Lovelace’s analytical engine will soon be doing.

This means that, in effect, the Information Revolution and the Industrial Revolution are co-natal: they come at the same time, rather than the Industrial Revolution coming first and then the Information Revolution beginning in the 20th century. It’s also significant because Lovelace, like others among her contemporaries, is captivated by the analogy of weaving. She even says that the analytical engine is to algebra what the Jacquard loom is to silk. She also notices that a loom is effectively an interface between artifice and nature; it’s the point at which the stuff that comes from silkworms meets human artifice and turns into an industrial product.

For her to see that machine as the model of the computer, and to see the analogy between the artificial and the natural, is significant also for many contemporary debates about modeling and simulation. In the book I reject the currently fashionable argument that the entire natural world is a virtual reality simulation. I argue against that view as it’s formulated by Nick Bostrom and Dave Chalmers among others, and I try to show the reason why a bit more sophistication in the history and philosophy of science is needed to understand what’s really going on when people try to mobilize what are, in the end, analogies.

5. We are fully dependent on the internet—and that’s changing everything.

Wikipedia has fundamentally rewired my own cognitive apparatus. I feel like I have an immediate external prosthesis of my faculty of curiosity. Whenever I have the slightest fleeting question in my mind about some medieval Byzantine heresy or about what a quasar is, I immediately look it up, and within 10 or 15 seconds, I have some basic understanding of what hesychasts are, for example. Over time, this has fundamentally altered my relationship to knowledge, and also my understanding of what it means to “know” at all.

This is a revolution comparable to the arrival of the printing press. That moment was revolutionary in that it was a cause of such things as the Protestant Reformation. But also, there was suddenly this possibility of having on hand all sorts of prosthetic devices that contain knowledge: books. And as a result, these wonderful, beautiful cognitive practices (such as Frances Yates’s “art of memory,” a complex medieval practice of learning elaborate mnemonics for the internalization of whole fields of knowledge) were simply lost.

Today, we are also in the course of losing something. I believe we are in the course of losing reading, even though things like book contests are trying to hold onto it. A revolution is underway, and it’s comparable to revolutions that have happened in the past. Old cognitive practices are being lost, new ones are on the horizon, and those of us who learned the old ones, like reading entire books from cover to cover, are feeling sad. So my book attempts to take honest personal stock of what is being lost and what is being gained with the internet.
