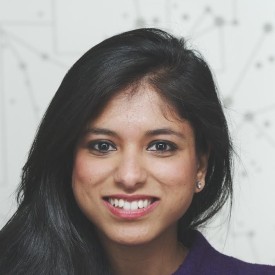

Madhumita Murgia is an award-winning Indian-British journalist and commentator who writes about the impact of technology and science on society. She is currently AI Editor at the Financial Times in London, where she leads global coverage of artificial intelligence and other emerging technologies. She has spent the past decade traveling the world writing about the people, start-ups, and corporations shaping cutting-edge technologies for publications including WIRED, The Washington Post, Newsweek, and the Telegraph. Murgia co-hosts the podcast series Tech Tonic.

Below, Madhumita shares five key insights from her new book, Code Dependent: Living in the Shadow of AI. Listen to the audio version—read by Madhumita herself—in the Next Big Idea App.

1. Human stories matter.

In a decade of reporting on AI, I came across very few stories of real people affected by the technology and the consequences of their entanglements with it. The ones I did read were almost never from outside the Western world.

I’ve spent much of my journalistic career chronicling the fortunes of corporate giants like Google and Meta, which have refined the gushing data reserves pouring into their platforms (generated by billions of people around the world) and turned them into products. In story after story, I discovered how a handful of engineers in Silicon Valley had an outsized impact on those billions in far-flung corners of the world, shaping everything from personal relationships to our ideas of democracy. Code Dependent is less about the creators of these technologies and more about the rest of us, the ordinary people whose daily lives have been transformed by AI software. These stories of gig workers, doctors, poets, parents, activists, and refugees are drawn from nine countries, ranging from the U.S. and the UK to China, India, Kenya, and Argentina.

Code Dependent is my attempt to make this invisible world of people’s encounters with AI tangible for readers. My hope is to foster a deeper understanding of the realities of AI and start important conversations about how we live alongside this powerful technology.

2. Spotlighting those in the shadows.

When I started reporting this book, I was agnostic about whose stories I would choose. I went looking generally for nuanced encounters between AI and people around the world. But over the course of reporting, it became clear that many of the stories I was uncovering belonged to people at the fringes: people of color, those living in poverty, women, migrants, refugees, and religious minorities. In Kenya and Bulgaria, I spent time with a community of data labelers, a hidden workforce in the global south, who help train and shape the AI systems we interact with through Meta, OpenAI, Walmart, and Tesla.

In Amsterdam, Diana Sardjoe, an immigrant and single mother, found her sons on a list generated by an algorithm that predicted they would go on to commit serious crimes. Those who contribute to AI out of sight and those adversely affected by it are often marginalized, while the advantages conferred by the technology are often enjoyed by privileged majorities. These stories show us what happens when societal biases are woven into AI software. There are serious consequences when technology devalues human agency and is implemented without the contribution of the communities it affects.

3. Techno-solutionism (the idea that we can fix everything with technology) is a myth.

AI and tech entrepreneurs view AI as a solution to some of society’s biggest challenges, including disease, climate change, and poverty, but this belief often runs into dead ends.

For example, in Salta, a small town in northern Argentina, the city government teamed up with Microsoft to create a predictive algorithm that estimated (with a claimed 86 percent accuracy) which teenage girls in a low-income community on the city’s outskirts would get pregnant. The AI system ended up marking one in three families as likely to have a teen pregnancy, showing simply that community-wide interventions, such as greater investment in social support and employment opportunities, were needed. They didn’t have to collect intimate, invasive data from young girls in that neighborhood to prove it.

4. The importance of human agency.

In one of the most uplifting stories I uncovered, I spoke with Dr. Ashita Singh, a doctor in rural India who serves indigenous communities known as the Bhil tribe. She provides healthcare to those who need it the most. Dr. Singh was also testing and helping develop an AI system that could diagnose tuberculosis simply by looking at an X-ray image.

Dr. Singh taught me about the importance of preserving human agency and expertise as we automate the most human parts of our society, be that criminal justice, education, or healthcare. She showed how the work of the best doctors wasn’t to be replaced but augmented. Gaps can be filled using AI technologies, but human aspects of care (being able to listen, empathize, and speak with patients) can never be replaced by technology.

I also uncovered the importance of accountability—the question of who holds the pen at the end of the day. When AI systems go wrong, when they make the errors expected of statistical systems, it’s important to identify who ultimately is accountable for the harm those system errors cause.

AI can weaken human agency. When I explored the stories of deepfakes, particularly deepfake pornography, I heard from Helen Mort, a poet in northern England, and Noelle Martin, a student in Sydney, Australia, who talked about the deep personal impact that deepfake pornography has had on their lives. It made them feel not just vulnerable but also weak, because they lacked the agency to correct the harms of deepfakes. But through these stories, you also see how human agency can be strengthened, particularly in the collective. For instance, when looking at gig work, you see unions and activists coming together to fight back against faceless AI systems.

5. Data colonialism and global participation.

A few powerful players are able to exploit and extract data in a new era of data colonialism. They do this using data from people in the global south (and other poorer parts of the world) as well as from people in Western countries.

For example, there are many creatives whose writing, music, images, and art have been scraped to build generative AI systems. This concept of data colonialism, which was proposed by the academic Nick Couldry, shows how power will only be further concentrated in the era of AI as more and more data is extracted from people around the world. It serves as a warning and a prompt to look toward resolving these issues.

My biggest takeaway is that the more diverse voices we include (people from non-Western countries, people from religious minorities, migrants, and rural and urban citizens alike), the more holistic and positive the outcomes of AI systems can be. My quest to discover how AI had transformed lives changed me, too. The most inspirational parts of the stories I uncovered were not the sophisticated algorithms and their outputs but the human beings using and adapting to the technology: the doctors, scientists, gig workers, and creatives who represent the very best of us. While I remain optimistic about the potential value of AI, I believe that no matter how exceptional a tool is, it only has utility when it preserves human dignity. My ultimate hope for AI isn’t that it creates a new upgraded species but that it helps us ordinary, flawed humans live our best and happiest lives.
